A complete guide to data warehousing with Snowflake

What is data warehousing?

Data warehousing is the process of collecting and managing data from various sources to provide meaningful insights for enterprises. A data warehouse is a centralized repository designed to integrate, store, and maintain historical data from multiple operational systems and external sources.

The primary purpose of a data warehouse is to support business intelligence (BI) activities, including data analysis, reporting, and decision-making processes. It consolidates data from different sources, ensuring consistency, accuracy, and a single source of truth for the organization.

Today, data warehousing plays a crucial role in modern data management strategies. It enables organizations to:

- Integrate data from disparate sources: Data warehouses consolidate data from various operational systems, such as transactional databases, ERP systems, CRM applications, and external data sources, into a unified repository.

- Support advanced analytics: By storing historical data in a structured format, data warehouses facilitate complex analytical queries, data mining, and advanced analytics techniques, enabling organizations to uncover valuable insights and make informed decisions.

- Improve data quality and consistency: Data warehouses implement data cleansing, transformation, and standardization processes to ensure data quality and consistency across the organization, reducing redundancy and inconsistencies.

- Enable faster reporting and decision-making: With data readily available in a centralized repository, data warehouses accelerate reporting processes, allowing organizations to generate timely and accurate reports for informed decision-making.

- Maintain historical data: Data warehouses store and maintain historical data, enabling organizations to perform trend analysis, track performance over time, and gain valuable insights from past data.

In short, a data warehouse helps organizations make effective use of their data assets, gain a competitive edge, and improve business outcomes through data-driven decision-making.

Overview of Snowflake and its architecture

Snowflake is a cloud-based data warehousing platform delivered as a fully managed service. Unlike traditional on-premises data warehouses, Snowflake was built for the cloud from the start and runs on public cloud infrastructure (Amazon Web Services, Microsoft Azure, or Google Cloud), taking advantage of the scalability and elasticity of cloud computing.

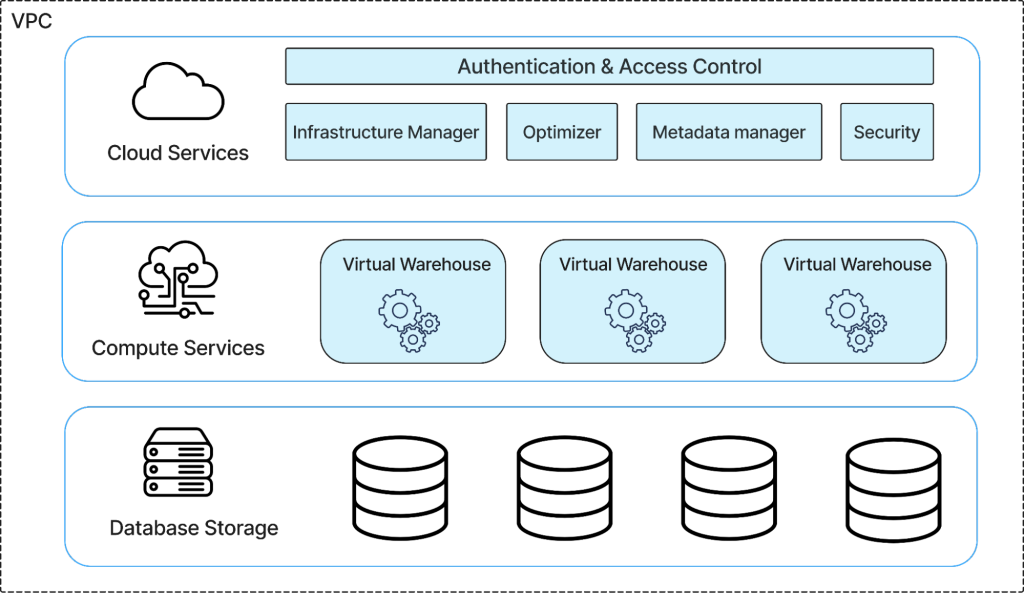

Separation of Storage and Compute

One of Snowflake’s key architectural features is the separation of storage and compute. Data is kept in cloud object storage, while queries run on independent compute clusters called virtual warehouses. Because the two layers scale independently, you can resize or add compute to match demand while your data stays where it is. Snowflake is also a fully managed service, so you don’t need to provision, patch, or upgrade hardware or software.

Multi-Cluster Shared Data Architecture

Snowflake also introduces the concept of a multi-cluster shared data architecture, where multiple compute clusters can access the same data simultaneously. This architecture enables efficient data sharing and collaboration among teams, eliminating the need for data silos and enabling faster time-to-insight.

Support for Semi-Structured Data

Another notable feature of Snowflake is its support for semi-structured data, such as JSON, Avro, and Parquet, in addition to structured data. This flexibility allows organizations to ingest and analyze a wide variety of data formats without the need for complex data transformation processes.

Built-in Data Security and Governance

Snowflake’s cloud-native architecture also provides built-in data security and governance features, including end-to-end encryption, role-based access control, and audit logging. These features help organizations maintain data integrity and comply with regulatory requirements.

Setting up your first Snowflake data warehouse

To get started with Snowflake, first create an account, for example by signing up for a free trial. Then follow the steps below.

Access the Snowflake console

- Once your account is activated, you’ll receive a welcome email with a link to the Snowflake console.

- Click the link to open the console, and log in with your username and password.

- You’ll be greeted by the Snowflake console, which is your central hub for managing your Snowflake data warehousing environment.

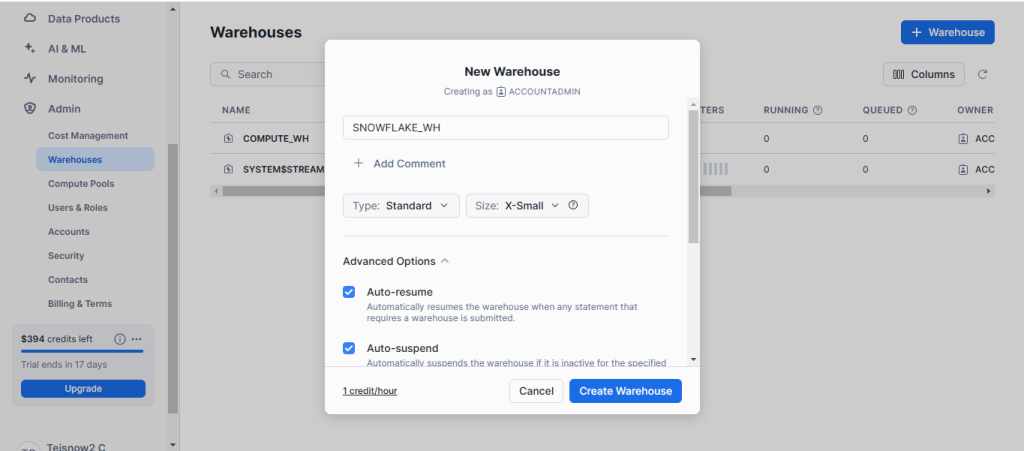

Set up your first virtual warehouse

- In the Snowflake console, click on the “Worksheets” tab to open the SQL editor.

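- Run the following command to create your first virtual warehouse. In Snowflake, a warehouse is a cluster of compute resources that runs your queries and data loads; your data itself is stored separately: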

```sql
CREATE WAREHOUSE MY_FIRST_WAREHOUSE;
```

Replace MY_FIRST_WAREHOUSE with a name of your choice.

- Creating a warehouse also makes it the current warehouse for your session. To select it explicitly, for example in a later session, run:

```sql
USE WAREHOUSE MY_FIRST_WAREHOUSE;
```

- Now you’re ready to create databases and tables and to load data into Snowflake.
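The single CREATE WAREHOUSE statement above relies on Snowflake’s defaults. As a hedged sketch of a more explicit setup, the Python helper below only composes SQL text; you would paste its output into a worksheet or send it through a client driver. The names `analytics_wh`, `analytics`, `raw`, and the `ORDERS` table are hypothetical, and the size and auto-suspend values are illustrative choices rather than recommendations.

```python
import re

# Identifiers are pasted into SQL text, so accept only simple unquoted names.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"}


def _ident(name: str) -> str:
    if not _IDENTIFIER.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    # Snowflake stores unquoted identifiers in upper case.
    return name.upper()


def setup_statements(warehouse, database, schema, size="XSMALL", auto_suspend_s=60):
    """Return Snowflake SQL for a warehouse, database, schema, and example table."""
    if size not in _SIZES:
        raise ValueError(f"unsupported warehouse size: {size!r}")
    wh, db, sc = _ident(warehouse), _ident(database), _ident(schema)
    return [
        # Suspend after `auto_suspend_s` idle seconds; resume when a query arrives.
        f"CREATE WAREHOUSE IF NOT EXISTS {wh} WITH WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {int(auto_suspend_s)} AUTO_RESUME = TRUE INITIALLY_SUSPENDED = TRUE;",
        # Explicit, in case the warehouse already existed.
        f"USE WAREHOUSE {wh};",
        f"CREATE DATABASE IF NOT EXISTS {db};",
        f"CREATE SCHEMA IF NOT EXISTS {db}.{sc};",
        # Hypothetical example table for later loading steps.
        f"CREATE TABLE IF NOT EXISTS {db}.{sc}.ORDERS "
        "(ORDER_ID NUMBER, CUSTOMER_ID NUMBER, ORDER_DATE DATE, AMOUNT NUMBER(12, 2));",
    ]


for statement in setup_statements("analytics_wh", "analytics", "raw"):
    print(statement)
```

A short AUTO_SUSPEND keeps an idle warehouse from consuming credits, and AUTO_RESUME = TRUE restarts it when the next query arrives.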

Congratulations! You’ve set up your first Snowflake virtual warehouse. From here, you can explore Snowflake’s other features and capabilities, such as data ingestion, transformation, and querying, to unlock the power of cloud-based data warehousing.

Techniques for loading data into Snowflake

Bulk Data Loading

The COPY INTO command is the main tool for bulk loading data into Snowflake. It loads files from a stage, either an internal Snowflake stage or an external location in Amazon S3, Google Cloud Storage, or Microsoft Azure, in formats such as CSV, JSON, Avro, ORC, Parquet, and XML. It loads files in parallel, can apply simple transformations during the load, and offers flexible file format and error-handling options.
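As a sketch of a bulk load, the helper below composes a COPY INTO statement for CSV files. It assumes that a stage named `orders_stage` already exists (created with CREATE STAGE, with files uploaded through PUT for an internal stage) and that the target table’s columns match the file layout. All names are hypothetical.

```python
import re

# Accept only simple, optionally qualified names (database.schema.object).
_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")
_ON_ERROR = {"ABORT_STATEMENT", "CONTINUE", "SKIP_FILE"}


def copy_csv_statement(table, stage, pattern="", skip_header=1, on_error="ABORT_STATEMENT"):
    """Compose a COPY INTO statement that bulk-loads staged CSV files into `table`."""
    if not (_NAME.match(table) and _NAME.match(stage)):
        raise ValueError("table and stage must be simple, optionally qualified names")
    if on_error not in _ON_ERROR:
        raise ValueError(f"unsupported ON_ERROR option: {on_error!r}")
    lines = [
        f"COPY INTO {table}",
        f"  FROM @{stage}",
        f"  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = {int(skip_header)})",
    ]
    if pattern:
        # PATTERN is a regular expression matched against staged file paths;
        # single quotes are doubled to keep the SQL string literal intact.
        escaped = pattern.replace("'", "''")
        lines.append(f"  PATTERN = '{escaped}'")
    # ABORT_STATEMENT (the bulk-load default) aborts the load on the first error,
    # CONTINUE skips bad rows, and SKIP_FILE skips any file that contains errors.
    lines.append(f"  ON_ERROR = {on_error};")
    return "\n".join(lines)


print(copy_csv_statement("analytics.raw.orders", "analytics.raw.orders_stage",
                         pattern=".*orders_.*[.]csv", on_error="CONTINUE"))
```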

Continuous data ingestion

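For real-time or near real-time data ingestion scenarios, Snowflake offers Snowpipe, a continuous data ingestion service that loads files into tables shortly after they arrive in a stage. With auto-ingest, cloud storage event notifications (Amazon S3 events delivered through Amazon SQS, Google Cloud Pub/Sub, or Azure Event Grid) tell Snowpipe when new files land; applications can also notify Snowpipe through its REST API. For streaming sources such as Apache Kafka or Amazon Kinesis, data typically reaches Snowflake either as files written to a stage for Snowpipe to pick up, or through Snowpipe Streaming, which writes rows directly into tables and which the Snowflake Connector for Kafka can use.

Snowpipe is serverless: Snowflake provides and manages the compute, so there is no warehouse to size. Because each pipe wraps a COPY INTO statement, Snowpipe supports the same file formats, load-time transformations, and error-handling options, and it keeps load metadata so that the same file is not loaded twice.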
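A pipe is essentially a named COPY INTO statement. The sketch below composes a CREATE PIPE ... AUTO_INGEST = TRUE statement for JSON files. The pipe, stage, table, and integration names are hypothetical, and the sketch assumes that the target table has a single VARIANT column. Auto-ingest also requires cloud event notifications to be configured outside Snowflake, which this sketch does not cover.

```python
import re

_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")
_FILE_TYPES = {"CSV", "JSON", "AVRO", "ORC", "PARQUET", "XML"}


def create_pipe_statement(pipe, table, stage, file_type="JSON", integration=""):
    """Compose CREATE PIPE ... AUTO_INGEST = TRUE AS COPY INTO ... for event-driven loads."""
    names = (pipe, table, stage) + ((integration,) if integration else ())
    for name in names:
        if not _NAME.match(name):
            raise ValueError(f"unsupported name: {name!r}")
    if file_type not in _FILE_TYPES:
        raise ValueError(f"unsupported file type: {file_type!r}")
    lines = [f"CREATE PIPE IF NOT EXISTS {pipe}", "  AUTO_INGEST = TRUE"]
    if integration:
        # Pipes on Google Cloud Storage or Azure reference a notification integration
        # (name in upper case); Amazon S3 pipes use the SQS queue Snowflake provides.
        lines.append(f"  INTEGRATION = '{integration.upper()}'")
    lines += [
        "  AS",
        f"  COPY INTO {table}",
        f"  FROM @{stage}",
        f"  FILE_FORMAT = (TYPE = '{file_type}');",
    ]
    return "\n".join(lines)


print(create_pipe_statement("analytics.raw.orders_pipe",
                            "analytics.raw.orders_raw",
                            "analytics.raw.orders_stage"))
```

After a pipe is created, SELECT SYSTEM$PIPE_STATUS('<pipe name>'); reports whether it is running and whether files are pending.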

Integrating with various data sources

Snowflake provides client drivers (such as JDBC, ODBC, and Python) and connectors (such as those for Apache Kafka and Apache Spark) that, together with partner integration tools, let you move data between Snowflake and a wide range of sources, including databases (Oracle, MySQL, PostgreSQL), other data warehouses (Redshift, BigQuery), and NoSQL stores (MongoDB, Cassandra).

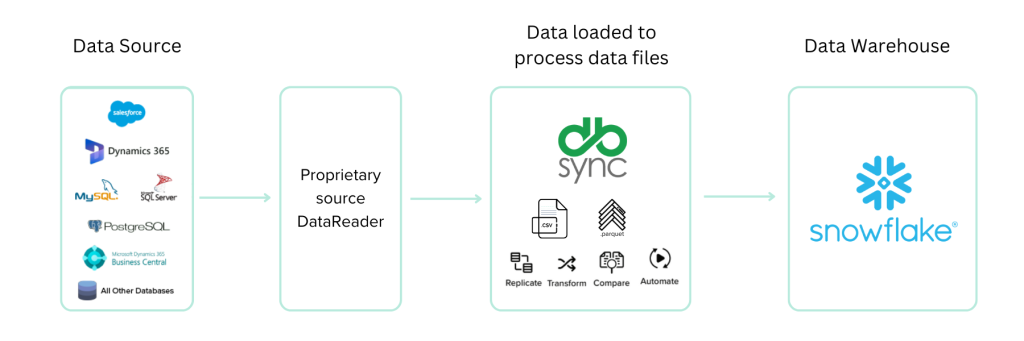

Snowflake also works with data replication platforms such as DBSync, which you can use to automate ELT pipelines from various data sources.

Handling data transformations

Snowflake’s powerful SQL engine and support for semi-structured data make it well-suited for handling complex data transformations. With its VARIANT data type, Snowflake can store and query semi-structured data like JSON and Avro without the need for explicit schema definitions.

Snowflake also supports advanced SQL capabilities such as window functions, PIVOT/UNPIVOT operations, and user-defined functions (UDFs) written in SQL, JavaScript, Python, Java, or Scala. These features enable sophisticated data transformations and analytics directly within the data warehouse.
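Window functions use standard SQL syntax, so you can try them locally before running them in Snowflake. The sketch below uses Python’s built-in sqlite3 module as a stand-in for Snowflake (SQLite 3.25 or later supports window functions). The `orders` table and its rows are made-up sample data. The same SUM(...) OVER (...) and RANK() OVER (...) expressions are also valid Snowflake SQL.

```python
import sqlite3


def running_totals():
    """Per-customer running total and amount rank, computed with window functions."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, order_date TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            (1, "2024-01-05", 120.0),
            (1, "2024-02-10", 80.0),
            (2, "2024-01-20", 200.0),
            (1, "2024-03-01", 50.0),
            (2, "2024-02-25", 40.0),
        ],
    )
    rows = conn.execute(
        """
        SELECT customer_id,
               order_date,
               amount,
               SUM(amount) OVER (PARTITION BY customer_id ORDER BY order_date) AS running_total,
               RANK() OVER (PARTITION BY customer_id ORDER BY amount DESC) AS amount_rank
        FROM orders
        ORDER BY customer_id, order_date
        """
    ).fetchall()
    conn.close()
    return rows


for row in running_totals():
    print(row)
```

Unlike GROUP BY, a window function keeps every input row and adds the computed value alongside it, which is why each order still appears in the output.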

For more complex transformations, Snowflake integrates with popular data processing frameworks like Apache Spark and Apache NiFi, allowing users to leverage their existing skills and tools within the Snowflake ecosystem.

Best Practices for data modeling, schemas, and tables in Snowflake

When working with Snowflake, it’s crucial to follow best practices for designing data models, defining schemas, and creating tables to ensure optimal performance, maintainability, and scalability. Here are some key considerations:

Data modeling

- Normalization: Normalize your data models to reduce redundancy and improve data integrity. However, avoid over-normalization, as it can lead to performance issues due to excessive joins.

- Star Schema: For analytical workloads, consider using a star schema or a variation like the snowflake schema. These models separate dimensional data from fact data, enabling efficient querying and aggregations.

- Denormalization: In some cases, denormalizing data by duplicating or pre-joining data can improve query performance, especially for frequently accessed data.
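To make the star schema concrete, the sketch below builds a tiny fact table with two dimension tables and aggregates revenue by month and category. It uses Python’s sqlite3 module as a local stand-in for Snowflake, and the table names and rows are invented for illustration. The DDL and the join query are ordinary SQL that Snowflake also accepts. Note that Snowflake records PRIMARY KEY constraints but does not enforce them.

```python
import sqlite3


def revenue_by_category_and_month():
    """Join a fact table to its dimensions and aggregate, as a star schema query does."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
        CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
        CREATE TABLE fact_sales (date_key INTEGER, product_key INTEGER,
                                 quantity INTEGER, revenue REAL);

        INSERT INTO dim_product VALUES
            (1, 'Laptop', 'Electronics'), (2, 'Headphones', 'Electronics'), (3, 'Desk', 'Furniture');
        INSERT INTO dim_date VALUES
            (20240105, '2024-01-05', '2024-01'), (20240212, '2024-02-12', '2024-02');
        INSERT INTO fact_sales VALUES
            (20240105, 1, 2, 2000.0),
            (20240105, 2, 5, 250.0),
            (20240105, 3, 1, 300.0),
            (20240212, 1, 1, 1000.0),
            (20240212, 3, 2, 600.0);
        """
    )
    rows = conn.execute(
        """
        SELECT d.month, p.category, SUM(f.revenue) AS revenue
        FROM fact_sales AS f
        JOIN dim_date AS d ON f.date_key = d.date_key
        JOIN dim_product AS p ON f.product_key = p.product_key
        GROUP BY d.month, p.category
        ORDER BY d.month, p.category
        """
    ).fetchall()
    conn.close()
    return rows


print(revenue_by_category_and_month())
```

The fact table holds only keys and measures, so descriptive attributes such as category live in one place and each query needs just one join per dimension.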

Schemas

- Naming Conventions: Establish clear naming conventions for schemas, tables, and columns to ensure consistency and maintainability across your organization.

- Logical Grouping: Group related tables and views into separate schemas based on their functional areas or domains, making it easier to manage and secure access.

- Security and Access Control: Leverage Snowflake’s role-based access control to manage permissions at the schema level, ensuring data security and governance.

Tables

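- Clustering Keys: For very large tables, define clustering keys based on the columns your most common queries filter or join on. Clustering co-locates rows with similar key values in the same micro-partitions, which lets Snowflake skip micro-partitions that cannot match a query.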

- Column Ordering: Because Snowflake stores table data in a columnar format, column order has little effect on query performance or storage. Order columns for readability instead, for example keys first, followed by frequently used attributes.

- Data Types: Choose appropriate data types for your columns to optimize storage and performance. Avoid using excessive precision or scale for numeric data types unless necessary.

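- Partitioning: Snowflake automatically divides every table into micro-partitions, so you do not partition tables manually. For large tables that are frequently filtered or joined on particular columns, load data in an order that groups those values or define a clustering key, so that Snowflake can prune micro-partitions efficiently.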

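- Compression: Snowflake compresses all table data automatically, so no configuration is needed. Automatic Clustering, which maintains a table’s clustering key in the background, takes effect when you define a clustering key and consumes credits, so reserve it for tables where the performance gains justify the cost.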

- Metadata Management: Maintain accurate and up-to-date table and column descriptions, comments, and other metadata to enhance data governance and discoverability.
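The clustering and metadata practices above come down to a few statements. As a hedged sketch, the helper below composes them for a hypothetical `analytics.raw.orders` table clustered on `order_date` and `region`. A date column usually makes a better clustering key than a high-cardinality timestamp. SYSTEM$CLUSTERING_INFORMATION reports how well the table is currently clustered on the given columns.

```python
import re

_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*){0,2}$")


def table_tuning_statements(table, cluster_columns, description):
    """Compose statements that set a clustering key, inspect it, and document the table."""
    if not _NAME.match(table) or not cluster_columns:
        raise ValueError("need a simple table name and at least one clustering column")
    for column in cluster_columns:
        if not _NAME.match(column) or "." in column:
            raise ValueError(f"unsupported column name: {column!r}")
    key = ", ".join(cluster_columns)
    comment = description.replace("'", "''")  # escape quotes inside the string literal
    return [
        # Define (or replace) the clustering key; Automatic Clustering then
        # maintains it in the background and consumes credits.
        f"ALTER TABLE {table} CLUSTER BY ({key});",
        # Report clustering depth and overlap for these columns.
        f"SELECT SYSTEM$CLUSTERING_INFORMATION('{table}', '({key})');",
        # Metadata management: keep a description with the table itself.
        f"COMMENT ON TABLE {table} IS '{comment}';",
    ]


for statement in table_tuning_statements(
    "analytics.raw.orders", ["order_date", "region"], "Orders from the nightly export"
):
    print(statement)
```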

By following these best practices, you can create efficient, scalable, and maintainable data models, schemas, and tables in Snowflake, enabling faster queries, better data quality, and easier data management.

Writing SQL queries and leveraging advanced analytics in Snowflake

Snowflake’s SQL support is broad and optimized for large analytical workloads. You can write standard SQL using SELECT, JOIN, window functions, and other common constructs. For semi-structured data such as JSON, Avro, and XML, you can traverse fields with path notation and expand arrays into rows with FLATTEN, usually combined with LATERAL. You can also extend SQL with user-defined functions, including functions that apply machine learning models.
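To make LATERAL FLATTEN concrete, suppose a hypothetical table `raw_orders` has a VARIANT column `payload` that holds one JSON order with an `items` array. The Snowflake query stored in the constant below returns one row per array element. The Python function emulates that row expansion with the json module. It is a conceptual model for checking expectations locally, not how Snowflake executes the query.

```python
import json

# The Snowflake query this sketch emulates (hypothetical table and column names).
SNOWFLAKE_QUERY = """
SELECT o.payload:order_id::NUMBER AS order_id,
       i.value:sku::STRING        AS sku,
       i.value:qty::NUMBER        AS qty
FROM raw_orders o,
     LATERAL FLATTEN(input => o.payload:items) i;
"""


def flatten_items(json_documents):
    """Emit one (order_id, sku, qty) row per element of each document's `items` array."""
    rows = []
    for text in json_documents:
        doc = json.loads(text)
        # As with FLATTEN's default OUTER => FALSE, an empty or missing array yields no rows.
        for item in doc.get("items", []):
            rows.append((doc["order_id"], item["sku"], item["qty"]))
    return rows


docs = [
    '{"order_id": 1, "items": [{"sku": "A-100", "qty": 2}, {"sku": "B-200", "qty": 1}]}',
    '{"order_id": 2, "items": [{"sku": "A-100", "qty": 5}]}',
    '{"order_id": 3, "items": []}',
]
print(flatten_items(docs))  # [(1, 'A-100', 2), (1, 'B-200', 1), (2, 'A-100', 5)]
```

Order 3 disappears from the result because its array is empty. In Snowflake, FLATTEN(input => ..., outer => TRUE) would keep such orders with NULL item values.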

One of Snowflake’s key strengths is that it optimizes queries automatically, with little manual tuning. A cost-based optimizer generates efficient query plans, and each virtual warehouse is a massively parallel processing (MPP) compute cluster that you can resize for more demanding queries. Micro-partition pruning, clustering keys, and result caching further improve performance.

For advanced analytics, Snowflake lets you write user-defined functions and stored procedures in languages such as Python, Java, Scala, and JavaScript, and call external services through external functions. You can deploy machine learning models or custom logic as secure user-defined functions. Snowpark, Snowflake’s developer framework for Python, Java, and Scala, lets you build data pipelines and machine learning workflows that run inside Snowflake, and Snowflake also integrates with external platforms such as Amazon SageMaker and Databricks for end-to-end data science workflows.

Securing data in Snowflake

Setting Up Roles and Permissions

Snowflake’s role-based access control (RBAC) model lets you manage access privileges at a granular level. A role is a collection of privileges on securable objects such as warehouses, databases, schemas, and tables, and users perform operations through the roles granted to them. You can create custom roles tailored to your organization’s needs, or use Snowflake’s system-defined roles, such as ACCOUNTADMIN, SECURITYADMIN, USERADMIN, SYSADMIN, and PUBLIC.

To set up roles and permissions, follow these steps:

- Define roles: Identify the different roles required in your organization, such as data analysts, data engineers, and administrators.

- Assign privileges: Assign the appropriate privileges to each role based on the principle of least privilege. This ensures that users have only the necessary permissions to perform their tasks.

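- Grant roles to users: Grant each role to the individual users who need it, based on their responsibilities and access requirements. Roles can also be granted to other roles to form a hierarchy; Snowflake recommends granting custom roles to SYSADMIN so that administrators can manage the objects those roles create.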

- Review and maintain roles: Regularly review and update roles as your organization’s needs evolve, ensuring that access privileges remain aligned with business requirements.
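The steps above can be sketched for a read-only analyst role. The helper below composes the CREATE ROLE and GRANT statements. You would typically run them as SECURITYADMIN or another role with the MANAGE GRANTS privilege. The role, warehouse, database, schema, and user names are hypothetical, and the privilege set is one reasonable least-privilege choice, not the only one.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def read_only_role_statements(role, warehouse, database, schema, user):
    """Compose least-privilege grants for a read-only analyst role."""
    for name in (role, warehouse, database, schema, user):
        if not _IDENT.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    target = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role};",
        # Lets the role run queries on this warehouse (USAGE does not allow resizing it).
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",
        # USAGE on the containing database and schema is needed before table privileges apply.
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {target} TO ROLE {role};",
        # Read existing tables, and tables created in the schema later.
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {target} TO ROLE {role};",
        f"GRANT SELECT ON FUTURE TABLES IN SCHEMA {target} TO ROLE {role};",
        # Attach the custom role to the hierarchy, then grant it to the user.
        f"GRANT ROLE {role} TO ROLE SYSADMIN;",
        f"GRANT ROLE {role} TO USER {user};",
    ]


for statement in read_only_role_statements("analyst", "analytics_wh", "analytics", "marts", "jdoe"):
    print(statement)
```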

Implementing Authentication and Encryption

Snowflake provides robust authentication and encryption mechanisms to ensure the security and integrity of your data.

Authentication:

- Single Sign-On (SSO): Snowflake supports federated authentication through SAML 2.0 identity providers such as Okta and Azure Active Directory (now Microsoft Entra ID), allowing users to sign in with their existing corporate credentials.

- Multi-Factor Authentication (MFA): Enable MFA for an additional layer of security, requiring users to confirm their identity with a second factor, such as a push notification or a one-time passcode.

Encryption:

- Data Encryption at Rest: Snowflake automatically encrypts all data at rest using industry-standard AES-256 encryption, ensuring that your data is protected even if the storage media is compromised.

- Data Encryption in Transit: All data transmitted between Snowflake and your applications is encrypted using TLS 1.2 or higher, protecting your data from unauthorized access during transit.

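- Key Management: By default, Snowflake manages encryption keys for you in a hierarchical key model and rotates them automatically. For additional control, the Business Critical edition offers Tri-Secret Secure, which combines a Snowflake-maintained key with a customer-managed key held in your cloud provider’s key management service.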

By implementing these security measures, you can protect your data in Snowflake from unauthorized access, modification, and disclosure, and support your organization’s security and compliance requirements.

Techniques for optimizing query performance, resource management, and scaling Snowflake

Query optimization

Query Rewrite and Optimization Rules: Snowflake automatically rewrites and optimizes queries to improve performance. It applies a set of optimization rules, such as predicate pushdown, join reordering, and partition pruning, to generate an efficient query plan.

Clustering Keys: Defining clustering keys on large tables can improve performance for queries that filter on, or join by, the clustering key columns, especially queries with range filters. Once a clustering key is defined, Snowflake’s Automatic Clustering service reclusters the table in the background, keeping rows with similar key values in the same micro-partitions so that queries can skip micro-partitions they do not need.
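Why clustering helps can be shown with a toy model of pruning. Snowflake keeps metadata, such as the range of values for each column, for every micro-partition, and it can skip any micro-partition whose range cannot satisfy a filter. The sketch below models only that min/max check on a date column, using 12 invented partitions. It is a simplification, not Snowflake’s implementation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class MicroPartition:
    """Toy stand-in for per-partition min/max metadata on one column."""
    min_date: date
    max_date: date


def partitions_to_scan(partitions, lo, hi):
    """Keep only partitions whose [min, max] range overlaps the filter range [lo, hi]."""
    return [p for p in partitions if p.max_date >= lo and p.min_date <= hi]


# Well clustered: each partition holds roughly one month of order dates.
clustered = [MicroPartition(date(2024, m, 1), date(2024, m, 28)) for m in range(1, 13)]
# Poorly clustered: every partition contains dates from the whole year.
unclustered = [MicroPartition(date(2024, 1, 1), date(2024, 12, 31)) for _ in range(12)]

march = (date(2024, 3, 1), date(2024, 3, 31))
print(len(partitions_to_scan(clustered, *march)))    # 1
print(len(partitions_to_scan(unclustered, *march)))  # 12
```

The query filters on the same range in both cases, but the well-clustered table lets the engine read 1 partition instead of 12.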

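Result Caching: Snowflake keeps the results of each query for 24 hours. When the same query runs again and the underlying data has not changed, Snowflake returns the stored result without executing the query on a warehouse, which saves both time and credits. Results are not reused if the query text differs, if the underlying data has changed, or if the query uses functions that must be evaluated at run time, such as CURRENT_TIMESTAMP() or UUID_STRING().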
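The reuse rules can be captured in a toy cache. The class below reuses a stored result only when three conditions hold: the query text matches exactly, the tables it read are unchanged, and the entry has not expired. The integer "table versions" are a hypothetical stand-in for however Snowflake tracks data changes. The model also omits details such as the 31-day maximum retention and the restrictions on run-time functions.

```python
from typing import Any, Dict, Optional, Tuple

TTL_SECONDS = 24 * 60 * 60  # a result is kept for 24 hours after it was last used


class ToyResultCache:
    """Toy model of result reuse: exact query text, unchanged tables, not expired."""

    def __init__(self) -> None:
        # query text -> (table versions when cached, result, time of last use)
        self._entries: Dict[str, Tuple[Dict[str, int], Any, float]] = {}

    def put(self, query: str, table_versions: Dict[str, int], result: Any, now: float) -> None:
        self._entries[query] = (dict(table_versions), result, now)

    def get(self, query: str, table_versions: Dict[str, int], now: float) -> Optional[Any]:
        entry = self._entries.get(query)
        if entry is None:
            return None  # different query text: nothing to reuse
        versions, result, last_used = entry
        if versions != table_versions or now - last_used > TTL_SECONDS:
            return None  # underlying data changed, or the entry expired
        self._entries[query] = (versions, result, now)  # reuse extends the lifetime
        return result


cache = ToyResultCache()
query = "SELECT COUNT(*) FROM orders"
cache.put(query, {"orders": 7}, result=1250, now=0)
print(cache.get(query, {"orders": 7}, now=3600))  # 1250: same text, same data
print(cache.get(query, {"orders": 8}, now=3600))  # None: the table changed
print(cache.get("SELECT COUNT(*) FROM orders WHERE amount > 0", {"orders": 7}, now=3600))  # None
```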

Resource management

Multi-Cluster Warehouses: You can scale compute up by resizing a virtual warehouse or, with multi-cluster warehouses (available in Enterprise Edition and higher), scale out by adding or removing clusters. This lets you handle varying workloads and adjust resources as needed, balancing performance and cost.

Resource Monitors: Resource monitors track the credits consumed by virtual warehouses, either for the whole account or for specific warehouses. You can set a credit quota for an interval (for example, monthly) and define triggers that send notifications or suspend warehouses when usage reaches a percentage of the quota, which helps you control costs.
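As a sketch, the helper below composes a monthly resource monitor that notifies at 80% of its quota and suspends the warehouse at 100%, then attaches it to one warehouse. The monitor and warehouse names and the quota are hypothetical. Creating resource monitors requires the ACCOUNTADMIN role. DO SUSPEND lets running statements finish; DO SUSPEND_IMMEDIATE would cancel them.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def resource_monitor_statements(monitor, warehouse, credit_quota, notify_pct=80, suspend_pct=100):
    """Compose a monthly credit quota that notifies, then suspends, one warehouse."""
    if not (_IDENT.match(monitor) and _IDENT.match(warehouse)):
        raise ValueError("monitor and warehouse must be simple identifiers")
    if credit_quota <= 0 or not 0 < notify_pct < suspend_pct:
        raise ValueError("need a positive quota and 0 < notify_pct < suspend_pct")
    return [
        f"CREATE RESOURCE MONITOR {monitor} WITH CREDIT_QUOTA = {credit_quota} "
        "FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY "
        f"TRIGGERS ON {notify_pct} PERCENT DO NOTIFY "
        f"ON {suspend_pct} PERCENT DO SUSPEND;",
        # Attach the monitor so this warehouse's credits count toward the quota.
        f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {monitor};",
    ]


for statement in resource_monitor_statements("analytics_monthly", "analytics_wh", 100):
    print(statement)
```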

Concurrency Levels: The MAX_CONCURRENCY_LEVEL parameter (default 8) sets the maximum number of SQL statements a warehouse cluster executes concurrently; additional statements are queued until resources become available. Lowering it gives each query more resources, while raising it lets more queries run at once.

Scaling for high concurrency

Auto-Scaling: When a multi-cluster warehouse runs in auto-scale mode (its minimum cluster count is lower than its maximum), Snowflake automatically starts and shuts down clusters as the concurrent workload changes. The scaling policy (Standard or Economy) controls how eagerly clusters are added. Auto-scaling changes the number of clusters, not the size of each cluster.
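The bounds of auto-scale mode come from a warehouse’s MIN_CLUSTER_COUNT and MAX_CLUSTER_COUNT. The sketch below stores an example ALTER WAREHOUSE statement for a hypothetical `analytics_wh`, then models the scaling decision with a deliberately crude rule: start enough clusters for the current load, clamped to those bounds. Snowflake’s actual policies decide based on queued queries and estimated load over time. The `queries_per_cluster` value is an assumption that echoes the default MAX_CONCURRENCY_LEVEL of 8; real capacity depends on query complexity.

```python
import math

# Example setting this toy loosely mirrors (hypothetical warehouse name).
MULTI_CLUSTER_SQL = (
    "ALTER WAREHOUSE analytics_wh SET MIN_CLUSTER_COUNT = 1 "
    "MAX_CLUSTER_COUNT = 3 SCALING_POLICY = 'STANDARD';"
)


def clusters_needed(concurrent_queries, queries_per_cluster, min_clusters, max_clusters):
    """Toy auto-scale rule: enough clusters for the load, clamped to [min, max]."""
    wanted = math.ceil(concurrent_queries / queries_per_cluster)
    return max(min_clusters, min(max_clusters, wanted))


for load in (0, 5, 12, 30):
    print(load, clusters_needed(load, queries_per_cluster=8, min_clusters=1, max_clusters=3))
```

In this toy, at 30 concurrent queries the warehouse is capped at 3 clusters, and the extra queries would wait in the queue. This is the trade-off that MAX_CLUSTER_COUNT sets between cost and queuing.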

Multi-Cluster Warehouses: As mentioned earlier, multi-cluster warehouses enable you to scale compute resources by adding or removing clusters. This flexibility allows you to handle high concurrency workloads by increasing the number of clusters, providing more computing power and parallelism.

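Workload Management: Rather than prioritizing individual queries within one warehouse, a common approach is to isolate workloads on separate warehouses (for example, one for ETL, one for BI dashboards, and one for ad hoc analysis), each sized and scaled for its workload. Warehouse parameters such as STATEMENT_QUEUED_TIMEOUT_IN_SECONDS and STATEMENT_TIMEOUT_IN_SECONDS limit how long queries can wait in the queue or run, and resource monitors cap each warehouse’s credit consumption.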

Partitioning and Clustering: Well-clustered data can improve query performance and scalability, especially in high-concurrency environments. When rows that are commonly filtered together share micro-partitions, Snowflake can prune more data, so each query does less work, which reduces resource contention and improves overall throughput.

Monitoring and troubleshooting Snowflake

Effective monitoring and troubleshooting are crucial for maintaining the performance and reliability of your Snowflake data warehouse. By proactively monitoring your Snowflake usage, you can identify potential issues before they escalate and take necessary actions to resolve them promptly.

Monitoring Snowflake Usage

Snowflake provides various tools and metrics to help you monitor your data warehouse’s performance and usage. Here are some key areas to monitor:

- Query Performance: Monitor the execution time, resource consumption, and concurrency of your queries. Identify slow-running queries and optimize them for better performance.

- Data Storage: Keep track of your data storage usage, including the size of your databases, schemas, and tables. This will help you manage your storage costs and plan for future growth.

- Warehouse Utilization: Monitor the utilization of your virtual warehouses, including the number of running queries, queued queries, and the overall resource consumption.

- Credit Usage: Snowflake bills compute in credits consumed by warehouses and serverless features, and bills storage separately. Monitor your credit usage to stay within budget and avoid unexpected costs.

- Failover and Availability: If you use replication and failover, monitor replication status and failover events for your Snowflake accounts to ensure business continuity and minimize downtime.
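For credit usage, the SNOWFLAKE.ACCOUNT_USAGE.WAREHOUSE_METERING_HISTORY view records the credits each warehouse consumes. The sketch below stores a 30-day summary query and adds a small helper that flags warehouses over a budget. The budgets and the sample rows are made up for illustration. ACCOUNT_USAGE views can lag real time by a few hours, and reading them requires access to the shared SNOWFLAKE database (granted to ACCOUNTADMIN by default).

```python
# Summarize credits per warehouse over the last 30 days.
CREDITS_BY_WAREHOUSE_SQL = """
SELECT warehouse_name, SUM(credits_used) AS credits
FROM snowflake.account_usage.warehouse_metering_history
WHERE start_time >= DATEADD(day, -30, CURRENT_TIMESTAMP())
GROUP BY warehouse_name
ORDER BY credits DESC;
"""


def over_budget(rows, budgets):
    """Return (warehouse, credits, budget) for rows whose credits exceed the budget.

    `rows` are (warehouse_name, credits) pairs such as the query above returns;
    warehouses without a budget entry are ignored.
    """
    flagged = []
    for name, credits in rows:
        budget = budgets.get(name)
        if budget is not None and credits > budget:
            flagged.append((name, credits, budget))
    return flagged


# Made-up numbers for illustration only.
sample_rows = [("ANALYTICS_WH", 412.5), ("ETL_WH", 180.0), ("ADHOC_WH", 95.25)]
print(over_budget(sample_rows, {"ANALYTICS_WH": 400, "ETL_WH": 250}))
```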

Identifying and Resolving Issues

When you encounter performance issues or errors in Snowflake, it’s essential to have a structured approach to troubleshooting. Here are some best practices:

- Analyze Query Profiles: Snowflake provides detailed query profiles that can help you identify bottlenecks and inefficiencies in your queries. Analyze these profiles to understand where your queries are spending the most time and resources.

- Review Error Messages: Pay close attention to error messages and log files. Snowflake’s error messages are often informative and can provide valuable insights into the root cause of the issue.

- Leverage Snowflake Support: Snowflake offers excellent support resources, including documentation, knowledge base articles, and a dedicated support team. Don’t hesitate to reach out to Snowflake support for assistance with complex or persistent issues.

- Implement Alerting and Monitoring: Set up alerting and monitoring systems to proactively detect and respond to issues. Snowflake integrates with various monitoring tools, allowing you to create custom alerts and notifications.
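A practical starting point for query profile analysis is finding the slowest recent queries. The sketch below composes a query against SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY that lists the longest-running queries, optionally for one warehouse. It includes the scanned and total micro-partition counts, because a query that scans nearly all partitions is often a pruning problem. The time window, limit, and warehouse name are illustrative parameters.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def slowest_queries_sql(days=7, limit=10, warehouse=""):
    """Compose a QUERY_HISTORY query listing the longest-running recent queries."""
    filters = [f"start_time >= DATEADD(day, -{int(days)}, CURRENT_TIMESTAMP())"]
    if warehouse:
        if not _IDENT.match(warehouse):
            raise ValueError(f"unsupported warehouse name: {warehouse!r}")
        # Unquoted identifiers are stored in upper case, so compare in upper case.
        filters.append(f"warehouse_name = '{warehouse.upper()}'")
    return "\n".join([
        "SELECT query_id, warehouse_name,",
        "       total_elapsed_time / 1000 AS elapsed_s,",
        # Scanning most of partitions_total suggests the filters are not pruning well.
        "       partitions_scanned, partitions_total,",
        "       LEFT(query_text, 80) AS query_start",
        "FROM snowflake.account_usage.query_history",
        "WHERE " + "\n  AND ".join(filters),
        "ORDER BY total_elapsed_time DESC",
        f"LIMIT {int(limit)};",
    ])


print(slowest_queries_sql(days=3, limit=5, warehouse="analytics_wh"))
```

With a query ID from the results, you can open its Query Profile in the web interface, or run SELECT * FROM TABLE(GET_QUERY_OPERATOR_STATS('<query_id>')); to see per-operator statistics in SQL.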

Best Practices for Troubleshooting

To streamline your troubleshooting process and ensure efficient problem-solving, consider the following best practices:

- Establish a Troubleshooting Workflow: Define a clear workflow for troubleshooting, including steps for gathering information, analyzing data, and escalating issues when necessary.

- Maintain Comprehensive Documentation: Document your Snowflake environment, including data models, query patterns, and any customizations or integrations. Well-documented systems are easier to troubleshoot and maintain.

- Leverage Monitoring and Logging Tools: Implement monitoring and logging tools to collect and analyze relevant data for troubleshooting purposes. This data can provide valuable insights and help you identify root causes more quickly.

- Collaborate and Share Knowledge: Foster a culture of knowledge sharing within your team. Encourage team members to document their troubleshooting experiences and share best practices, allowing others to learn from past issues and solutions.

By following these monitoring and troubleshooting practices, you can keep your Snowflake data warehouse running smoothly, minimize downtime, and maintain optimal performance for your data-driven applications.

Integrating Snowflake with third-party applications

Snowflake is designed to seamlessly integrate with a wide range of popular business intelligence (BI) tools, data science platforms, and other third-party applications. This integration allows organizations to leverage Snowflake’s powerful data warehousing capabilities while utilizing the tools and platforms they are already familiar with.


Connecting to BI tools

Snowflake offers native connectivity to leading BI tools such as Tableau, Power BI, and Looker. This integration enables users to directly connect their BI tools to Snowflake and perform advanced data analysis, visualization, and reporting without the need for complex data extraction processes. Users can leverage Snowflake’s scalable computing power and optimized data storage to accelerate their BI workflows and gain faster insights.

Integrating with data science platforms

Snowflake also supports seamless integration with popular data science platforms like Databricks, DataRobot, and Amazon SageMaker. Data scientists can leverage Snowflake’s secure and governed data platform to access and analyze large datasets, build and deploy machine learning models, and operationalize their data science workflows. This integration streamlines the entire data science lifecycle, from data exploration to model deployment and monitoring.

Connecting to third-party applications

Snowflake provides a range of connectors and APIs that enable integration with various third-party applications and services. For example, organizations can connect Snowflake to their customer relationship management (CRM) systems, marketing automation platforms, or enterprise resource planning (ERP) systems. This integration allows for seamless data exchange, enabling organizations to consolidate and analyze data from multiple sources within Snowflake’s data warehouse.

Why you need 3rd party integrations for Snowflake

Integrating Snowflake with third-party applications offers several benefits:

- Streamlined Workflows: By connecting Snowflake to the tools and platforms already in use, organizations can streamline their data analysis and processing workflows, reducing the need for complex data movement and transformation processes.

- Improved Productivity: With seamless integration, users can leverage the familiar interfaces and functionalities of their preferred tools while taking advantage of Snowflake’s powerful data warehousing capabilities, ultimately improving productivity and efficiency.

- Centralized Data Management: Snowflake acts as a centralized data repository, enabling organizations to consolidate and manage data from various sources in a secure and governed environment.

- Enhanced Collaboration: By integrating with different tools and platforms, Snowflake facilitates collaboration among diverse teams, such as data analysts, data scientists, and business users, enabling them to work together more effectively on data-driven projects.

Snowflake’s commitment to open standards and extensive partner ecosystem ensures that organizations can leverage their existing technology investments while taking advantage of Snowflake’s powerful data warehousing capabilities.

Explore practical use cases and success stories

E-commerce: Enabling real-time analytics for personalized marketing campaigns

Snowflake’s data warehousing capabilities have been leveraged by organizations across various industries to drive data-driven insights and decision-making. One notable success story comes from a leading e-commerce company that faced challenges in managing and analyzing its massive volumes of customer data. By implementing Snowflake, they were able to consolidate data from multiple sources into a centralized data warehouse, enabling real-time analytics and personalized marketing campaigns. This resulted in a significant increase in customer engagement and revenue.

Healthcare: Consolidate and analyze disparate patient data for better outcomes

Another practical use case involves a healthcare provider that needed to integrate patient data from various systems, including electronic health records (EHRs), claims data, and clinical trial data. With Snowflake, they created a secure and compliant data warehouse, enabling researchers and healthcare professionals to access and analyze patient data seamlessly. This facilitated better patient outcomes, improved clinical decision-making, and accelerated medical research.

Financial Services: Analyze customer data to offer personalized products

In the financial services industry, a major bank leveraged Snowflake’s data warehousing capabilities to gain a comprehensive view of its customers’ financial activities. By integrating data from multiple sources, including transaction records, customer profiles, and market data, they could perform advanced analytics and risk assessments. This enabled them to offer personalized financial products, enhance fraud detection, and comply with regulatory requirements more effectively.

Retail: Real-time analytics to optimize inventory and more

Snowflake’s scalability and performance have also proven valuable for organizations in the retail sector. One large retail chain utilized Snowflake to consolidate data from various sources, including point-of-sale systems, inventory management, and customer loyalty programs. By analyzing this data in real time, they could optimize inventory levels, identify trends, and deliver targeted promotions, resulting in increased sales and customer satisfaction.

These practical use cases and success stories demonstrate the versatility and power of Snowflake’s data warehousing solutions. Organizations across diverse industries have leveraged its capabilities to gain valuable insights, drive innovation, and achieve their strategic goals.

Staying up to date with Snowflake: Resources and Support

For those seeking to deepen their knowledge and stay up-to-date with the latest developments in data warehousing with Snowflake, there are numerous valuable resources available. Here’s a curated list of recommended resources, documentation, forums, and communities:

Official Snowflake Documentation: Snowflake’s comprehensive documentation is a treasure trove of information, covering everything from getting started guides to advanced topics, best practices, and troubleshooting. It’s regularly updated and includes tutorials, sample code, and reference materials.

Snowflake Community: The Snowflake Community is an active forum where users can ask questions, share knowledge, and collaborate with fellow data professionals. It’s a great place to connect with experts, find solutions to common challenges, and stay informed about upcoming events and releases.

Snowflake Blog: The official Snowflake Blog is a valuable source of news, product updates, and insightful articles written by Snowflake’s engineers and data experts. It covers a wide range of topics, from technical deep dives to industry trends and use cases.

Snowflake Virtual Hands-on Lab: Snowflake offers a virtual hands-on lab environment, allowing users to gain practical experience with their platform without the need for local setup or configuration. This interactive learning resource is ideal for those who prefer a hands-on approach.

Snowflake on YouTube: Snowflake’s official YouTube channel features a wealth of video content, including product demos, webinars, and educational series. It’s a great resource for visual learners and those who prefer video tutorials and presentations.

Snowflake Certifications: For professionals seeking to validate their Snowflake expertise, Snowflake offers several certification tracks, such as the SnowPro Core certification and the role-specific SnowPro Advanced certifications.

Snowflake User Groups: Many cities and regions have active Snowflake User Groups, where data professionals can network, share experiences, and learn from each other. These groups often host meetups, workshops, and presentations by Snowflake experts and power users.

Snowflake Partner Resources: Snowflake has a robust partner ecosystem, and many partners offer additional resources, such as training courses, consulting services, and specialized tools or integrations.

By leveraging these resources, you can stay up-to-date with the latest advancements, connect with the Snowflake community, and continually expand your knowledge and skills in data warehousing with Snowflake.